When we talk about generative AI, two names come to mind: ChatGPT and Google Gemini. The capabilities of both have advanced over the years as their developers continue to release newer versions.

Recently, on May 13, 2024, OpenAI, the company that developed ChatGPT, released a newer and smarter version, named ChatGPT-4o. Google, for its part, released a premium version of its chatbot, called Gemini Advanced. Google claims that this version is much quicker and provides better results than the previous one.

Which of the two models should you choose if you’re looking to summarize a piece of text? Both are capable of doing that, but which one works better? Well, in this article, we’ll be answering this question. We’ll conduct a series of tests, each of which focuses on a specific type of summary that a particular audience usually needs.

For example, students need an academic summary, whereas professionals usually need an executive summary. So, by the end of this article, we will have seen which language model works best for each type of summary, and based on the overall results, one winner will be decided. The linguistic features of each output that the models generate will also be taken into consideration while deciding the winner.

The first test that we’ll throw at both language models is the creation of an executive summary. A business proposal will be provided to ChatGPT-4o and Gemini Advanced to see which one can better distill key business information for business decision-makers.

That said, the prompt that we’ll provide both models is as follows.

Prompt:

"Provide a concise executive summary of this business proposal. Focus on the primary goals, strategies, projected outcomes, and key metrics."

When we ran the prompt through ChatGPT-4o, it provided a very elaborate summary. It was too long and definitely not what we were looking for, so we had to ask the chatbot not once but twice to make it more concise and remove unnecessary material. Here is the final output that we could get.

ChatGPT-4o Output:

Conversely, the output summary provided by Gemini Advanced against the same prompt is as follows.

Gemini Advanced Output:

Seeing the output of both chatbots, we can deduce that both summaries cover the primary goals, strategies, projected outcomes, and key metrics accurately.

ChatGPT-4o provides a bit more detail but had to be told twice to make the summary more concise. It even mentions the name of the management team, something that Google Gemini omitted in its draft.

However, Gemini did create a good summary on the first go, and we didn’t have to ask it for revisions. While the summary is not as comprehensive as ChatGPT-4o’s, it is still quite good. Enough is covered to convey the core message effectively to readers.

Considering these points, we’re leaning a bit towards Gemini Advanced in this test. Additionally, it is worth mentioning that the summary generated by Gemini Advanced was much easier for an average professional to understand. When it comes to readability, we’d give it a 9/10 score. ChatGPT-4o, on the other hand, gets a 7.5/10.

This test will focus on academic summary creation. Since academic summaries are created mostly by students, we’ll tailor the prompt to them.

That said, the purpose of this test is to evaluate the models' ability to summarize academic content from an essay for a student audience, all while making complex ideas accessible. We’ll summarize a lengthy essay on the topic “Global Warming: Causes, Effects, and Solutions.”

The prompt that we’ll use for both chatbots is as follows.

Prompt:

“Please provide a concise academic summary of this essay. Focus on the main arguments, supporting evidence, and conclusion.”

Providing the prompt to ChatGPT-4o, we got a lengthy and highly elaborate summary again. In fact, it was so long that we had to check whether it had simply recycled the original text. After asking it to revise the summary and make it more concise, here’s the final output.

ChatGPT-4o Output:

On the other hand, Gemini Advanced came up with a good and concise summary without the need for any revision. Here’s its output.

Gemini Advanced Output:

We can see that both models tried to generate a summary that fulfills our request of focusing on the main argument, supporting evidence, and conclusion.

However, it is worth mentioning that ChatGPT-4o created an output that was a bit too concise and straightforward. It summarized the main points without much detail. Since the essay was quite long, there was no way it could’ve generated such a short summary without omitting a few important details.

The main arguments about the causes (greenhouse gases), effects (rising temperatures, melting ice caps, rising sea levels, extreme weather), and solutions (renewable energy, policy changes, public awareness) were all covered.

But all this was given to us when we asked the model to revise its output. The one we got the first time around was a bit too long and lackluster. It seems like the outputs are either too long or too short with ChatGPT-4o.

In contrast, as mentioned, Gemini Advanced created a more comprehensive academic summary on the first go, without any revisions. It captured the main arguments, supporting evidence, and conclusions in a detailed and structured manner without omitting anything. This aligns well with the academic requirements of summarizing an essay. Even the publication date was mentioned.

As evident from the discussion, Gemini Advanced is the winner here as well. It fulfilled our request better and created a compelling summary that covered every aspect of the essay in detail.

However, when it comes to which summary is easier to understand, ChatGPT-4o’s is a bit ahead. But if we’re being honest, that’s only because it is shorter and includes fewer details. Considering this, we’d give it a readability score of 8/10 and the summary generated by Google Gemini a 7/10.

Now comes the third test of the language models. It will focus on abstract generation, and the audience will be students or researchers.

The purpose of this test is to assess how well the models can generate abstracts for academic papers and capture the essence of the research. The research paper that we’ll be summarizing is titled “Analysis of full-waveform LiDAR data for forestry applications: a review of investigations and methods.”

This paper explores the use of full-waveform (FW) LiDAR, a remote sensing technology, in forestry applications. That said, the prompt we’ll be using to generate its abstract from both language models is as follows.

Prompt:

“Generate an abstract for this academic paper. Summarize the purpose, methods, results, and conclusions succinctly. Also, include the mathematical data from the paper to add context.”

When we gave the prompt to ChatGPT-4o, the pattern of revision continued. At first, we got something closer to a recap of the research than an abstract. We had to remind ChatGPT-4o that it’s an abstract that we’re looking for. After that, here’s the result.

ChatGPT-4o Output:

On the other hand, here’s the abstract Gemini Advanced provided us with against the same prompt. It did that in the first go yet again.

Gemini Advanced Output:

It is safe to say that both ChatGPT-4o and Gemini Advanced came up with a good and comprehensive abstract. Even if ChatGPT did it after a revision, the output is quite good. However, when comparing the two, the one generated by OpenAI’s model falls a bit short.

ChatGPT-4o’s abstract starts by telling us what the paper explores. While we did ask it to generate a concise one, we didn’t know it would omit contextual information. On the other hand, Gemini Advanced starts its abstract by providing us with an overview of what FW LiDAR technology is and what it is used for in this specific case.

Besides this, ChatGPT also failed to present the advantages of using FW LiDAR that were presented in the paper, leading to much less depth in the abstract. This key information was not omitted in Gemini’s version.

Lastly, you can see that Gemini integrated a specific equation related to the LiDAR data. This improves the technical element of the abstract, making it more informative for readers interested in the mathematical aspects of the study. ChatGPT does mention the height estimation improvements and significant correlation values for forest metrics, but it doesn’t include a specific example of vertical resolution calculation using an equation.

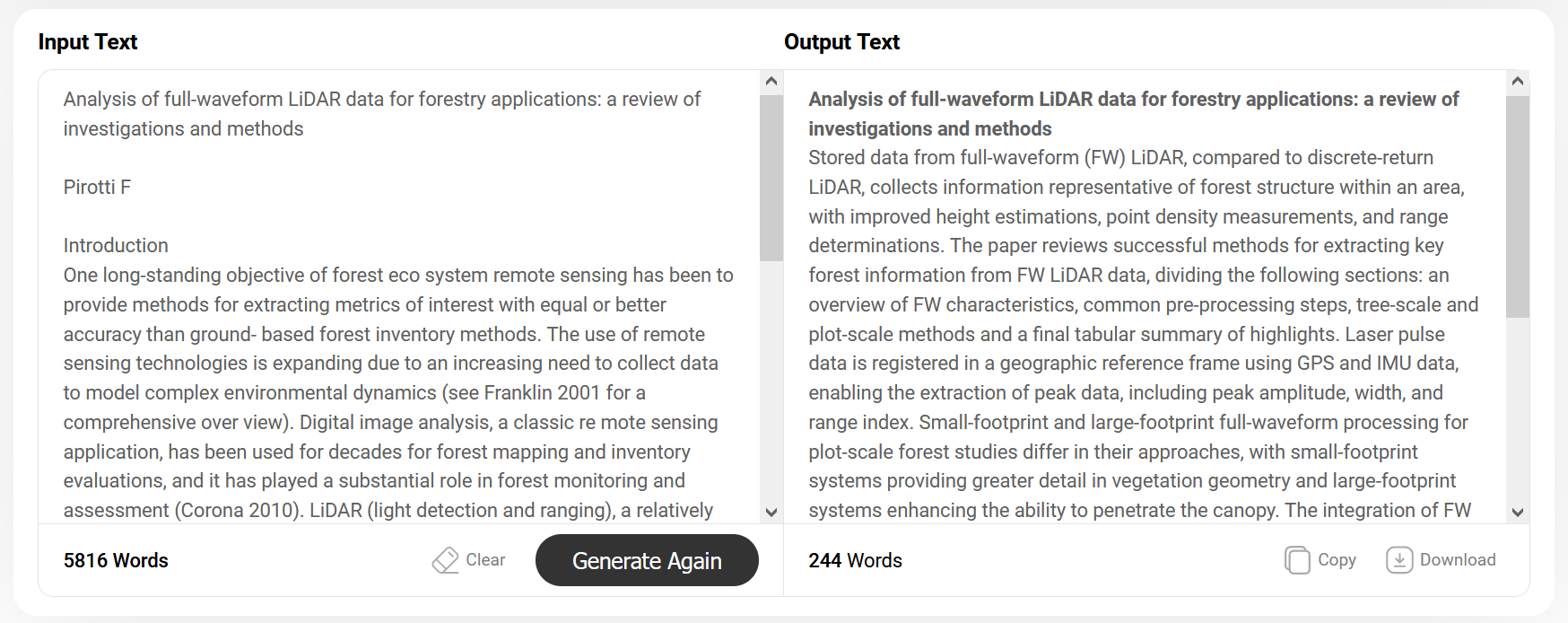

To give you a better understanding of how both models performed, we’ll compare them to the output of a tool that was made solely to generate abstracts: the Abstract Generator by Summarizer.org. Its output is as follows.

Since you can’t see the full output, we’ll tell you the details ourselves. This abstract is a bit longer than what both language models created. It conveys everything about the paper quite well, but there is no mathematical equation here either. This means Gemini Advanced performed a bit better than even this tool.

Based on the comparison, we can confidently say that Gemini Advanced demonstrated superior comprehensiveness and detail in the abstract it generated. This integration of detailed information makes Gemini Advanced the winner in this test as well.

While its summary was good enough for it to be crowned the winner, it was not as readable as ChatGPT-4o’s. OpenAI’s model tended to include fewer details, which made its output slightly more readable, like before. We’d give ChatGPT’s summary a 9/10 for readability here and Google Gemini’s an 8.5/10.

It is widely known that conclusions are a sort of summary as well. They have to be included in almost every type of write-up. This is why our fourth test will focus on conclusion generation.

The main purpose of this test is to evaluate each model’s ability to summarize a blog post and present its conclusion. That said, the blog we’ll be using for this test is titled “How to Write Appealing B2C Marketing Content?”

Having said that, the prompt we’ll use to generate conclusions from both chatbots is the following one.

Prompt:

“Conclude this blog post concisely while highlighting the main takeaways. Mention the key points, implications, and any actionable advice provided in the post.”

First, we’ll run this through ChatGPT-4o. The conclusion generated by it can be seen below.

ChatGPT-4o Output:

Conversely, what Gemini Advanced came up with is as follows.

Gemini Advanced Output:

Right off the bat, it can be seen that ChatGPT-4o generated a much more concise conclusion than Gemini.

OpenAI’s language model is more straightforward and directly lists the tips and their benefits in the conclusion. The words it has used are also much simpler than Gemini Advanced’s. Words like “multi-faceted” and complex sentence structures make the conclusion generated by Google’s model a bit hard to read.

Additionally, ChatGPT-4o ends with a strong call to action, encouraging businesses to consider professional assistance, and making the advice more practical and immediately actionable.

Gemini also mentions professional assistance but does so in a more abstract manner. This may not be as compelling for the reader.

To take things a step further, we’ll generate a conclusion for the same blog post from a tool that was built solely for this purpose, just like we did in the previous test. The tool in question is the Conclusion Generator by Summarizer.org. We’ll then use its conclusion as a reference point to see how well the language models performed against it. Here’s the result of the tool:

We can see that this conclusion effectively covers everything and is concise, like ChatGPT-4o’s. Google Gemini, on the other hand, comes in last here, as the outputs of both Summarizer.org and OpenAI’s chatbot are better.

Taking the comparison into consideration, ChatGPT-4o, for the first time in this post, wins this round. It provides a more concise, clear, and actionable conclusion. The main takeaways are effectively highlighted and directly connected to the benefits, making them more impactful.

Not only did it pull ahead on these points, but its conclusion was also much easier to understand. We would give it a 10/10 readability score here. Google Gemini is behind with an 8/10.

Our next test will focus on the general public. Since news articles are read by almost everyone, it is worth summarizing one to see how well the models perform against each other.

That being said, the main purpose of performing this test is to see how well the models can summarize news articles for a broad audience, highlighting key facts and events. “A Town’s Unlikely Hero” is the title of the news article that we’ll be summarizing.

To instruct the chatbots, we’ll input the following prompt.

Prompt:

“Provide a concise summary of this news article. Focus on the main events, key facts, and overall significance. Also, ensure the summary is clear and easy to understand for a general audience.”

With ChatGPT-4o, the following output was generated.

ChatGPT-4o Output:

Gemini Advanced, on the other hand, provided us with the following summary.

Gemini Advanced Output:

Just by looking at the two outputs, we can see that Gemini Advanced managed to create a shorter summary of the provided news article.

The level of detail in ChatGPT-4o’s summary is good, but it is a bit too much. There was absolutely no need to include things like “On a calm evening” and what Whiskers' tale highlights in the summary.

On the other hand, Gemini Advanced kept the summary to a good length by only including things that were absolutely necessary. It captures the essential facts effectively: Whiskers' actions, the Johnson family's escape, and the adoption of Whiskers. The summary is also much easier to understand for a general audience as it is to the point.

While both summaries capture the essence of the news article, Gemini Advanced’s output is more aligned with our prompt. It is concise and focused on the main events, key facts, and overall significance while ensuring clarity for a general audience. Therefore, it takes the cake.

Additionally, its summary is easier to comprehend for a general audience, mainly because there aren’t too many details and the whole thing is to the point. We’d give it a readability score of 10/10, whereas ChatGPT-4o only managed to score a 9/10.

Healthcare providers often have to summarize patients’ medical reports to go through them quickly, especially when they’re dealing with multiple reports at the same time.

This is what this test will be all about. We’ll summarize a medical report of a patient named “Mr. Tan Ah Kow” using both language models. Our purpose is to determine the models' ability to summarize medical information for healthcare providers, all while ensuring clarity and accuracy.

The prompt for both ChatGPT-4o and Gemini Advanced will be the following one.

Prompt:

“Generate a concise medical summary of this case report. Highlight the patient history, diagnosis, treatment, and outcomes. Ensure the summary is clear and precise, suitable for healthcare professionals.”

With this prompt, ChatGPT-4o generated the following result.

ChatGPT-4o Output:

Secondly, here is what Gemini Advanced came up with.

Gemini Advanced Output:

One can immediately see the pattern of conciseness being repeated here by Gemini Advanced. The summary it came up with is almost half the length of the one generated by ChatGPT-4o.

ChatGPT provides more specific details about the patient's hospital visits, dates, and types of follow-up treatments. It also offers a thorough account of the patient’s cognitive tests and outcomes.

On the other hand, Gemini Advanced covers the basics and provides a good overview of the patient history. It is adequate, but we think it offers too little detail for healthcare providers. For example, Gemini mentions that the patient is suffering from “Cognitive decline” but it doesn’t say what sort of decline. In contrast, ChatGPT provided details such as the patient being unable to do other things except for feeding.

Since a medical summary has to be read by medical professionals who have to perform treatments, they need as much information about the patient as they can get.

Considering what we just said, ChatGPT-4o fulfills the request better by providing a more comprehensive summary.

It includes detailed patient history, specific treatment dates, and comprehensive outcomes. This level of detail is more suitable for healthcare professionals who need extensive information for treatment planning.

However, the medical summary it generates isn’t as easy to read as Google Gemini’s. But that doesn’t matter much, as medical professionals probably prefer comprehensiveness over ease of reading anyway. The readability score of ChatGPT-4o in this test is 8/10, whereas Gemini Advanced is one point ahead with a 9/10. The reason for that is fewer details yet again.

| Test | Objective | ChatGPT-4o Output | Gemini Advanced Output | Winner |
| --- | --- | --- | --- | --- |
| 1: Executive Summary | Determine which model better distills key business information for decision-makers. | Detailed summary but needed two revisions for conciseness. | Created a good summary in the first attempt without revisions. | ✅ Gemini Advanced |
| 2: Academic Summary | Evaluate the models' ability to summarize academic content from an essay for students. | Initially too long; the concise version lacked some important details. | Provided a comprehensive summary in the first go without omissions. | ✅ Gemini Advanced |
| 3: Abstract Generation | Assess how well the models generate abstracts for academic papers. | Needed a revision; lacked some contextual information and technical detail. | Generated a comprehensive and detailed abstract on the first try, including key equations. | ✅ Gemini Advanced |
| 4: Conclusion Generation | Evaluate each model’s ability to summarize a blog post and present its conclusion. | Provided a concise, clear, and actionable conclusion. | More detailed but less readable and actionable. | ✅ ChatGPT-4o |
| 5: News Summary | See how well the models can summarize news articles for a broad audience. | Detailed but included unnecessary information. | Concise, focused on main events, and clear for a general audience. | ✅ Gemini Advanced |
| 6: Medical Summary | Determine the models' ability to summarize medical information for healthcare providers. | Provided a comprehensive and detailed summary suitable for healthcare professionals. | Concise but lacking in specific details needed by healthcare professionals. | ✅ ChatGPT-4o |

In this post, we compared two famous LLMs, ChatGPT-4o and Gemini Advanced, to see which one summarizes text best. In our testing, Gemini Advanced won 4 out of 6 tests.
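
For readers who want to check the arithmetic, here is a minimal Python sketch that recounts the wins and averages the readability scores. It only copies the winners and the scores reported in the six tests above. The plain unweighted average is an assumption made for illustration, not a scoring method used in the tests, and the names `RESULTS`, `tally_wins`, and `average_readability` are hypothetical.

```python
from collections import Counter
from statistics import mean

# Each entry: (test name, winner, ChatGPT-4o readability, Gemini Advanced readability).
# Winners and scores are copied from the six tests in this article.
RESULTS = [
    ("Executive Summary", "Gemini Advanced", 7.5, 9.0),
    ("Academic Summary", "Gemini Advanced", 8.0, 7.0),
    ("Abstract Generation", "Gemini Advanced", 9.0, 8.5),
    ("Conclusion Generation", "ChatGPT-4o", 10.0, 8.0),
    ("News Summary", "Gemini Advanced", 9.0, 10.0),
    ("Medical Summary", "ChatGPT-4o", 8.0, 9.0),
]


def tally_wins(results):
    """Count how many tests each model won."""
    return Counter(winner for _, winner, _, _ in results)


def average_readability(results):
    """Unweighted mean readability per model (an assumption, not the article's method)."""
    return {
        "ChatGPT-4o": mean(row[2] for row in results),
        "Gemini Advanced": mean(row[3] for row in results),
    }


if __name__ == "__main__":
    for model, wins in tally_wins(RESULTS).most_common():
        print(f"{model}: {wins} of {len(RESULTS)} tests won")
    for model, score in average_readability(RESULTS).items():
        print(f"{model}: average readability {score:.2f}/10")
```

Under these assumptions, the script counts four wins for Gemini Advanced and two for ChatGPT-4o, while both models average roughly 8.58 out of 10 for readability. This suggests the gap came mainly from how well each model followed the prompt on the first attempt, not from readability alone.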

Gemini Advanced excels in providing quick, concise summaries without the need for revisions, making it ideal for short and to-the-point summary creation.

However, ChatGPT-4o shines in delivering detailed and comprehensive summaries, particularly suited for scenarios demanding extensive information. The choice between the two models depends on the specific needs of the user. Gemini Advanced is preferable for efficiency and ChatGPT-4o for thoroughness.